Game Changer or Game Over? Get Ready for GPT-3

As AI gets increasingly advanced, with the ability to write coherent text and even code, should content creators fear being rendered obsolete in the years to come?

If you hang out on the fringes of the Internet, you may have seen very smart people losing their minds over something called GPT-3.

GPT-3 is an artificial intelligence language model that has learned from vast amounts of text collected from the Internet, and it can now create incredibly human-like conversations, stories, designs and much more. It's cool, it's creepy and it could change the world.

Billionaire Chris Sacca is one of those smart people who had his mind blown. Sacca was an early investor in companies like Twitter, Uber and Instagram, so he knows a thing or two about game changers. This was his take:

“I remember when and where I was when I played Pong the first time. Same for my first Google query. Like those massive leaps forward, I’ll never forget my first time using GPT-3. Every bit of the hype is deserved.”

Take careful note of what he said next.

“This is not hyperbole or pastebait. I actually think GPT-3 could change everything, and I’m confident we will see massive economic, educational, and scientific shifts within the next 5–10 years as a direct result of this technology.”

Actually, Sacca didn’t say that second part. That’s what GPT-3 came up with when Sacca submitted his quote to GPT-3 and prompted it to say more. Could you tell the difference? (Do note that for all the examples, we’ve kept the text from GPT-3 exactly as it is, mistakes and all.)

Gandhi, meet Genghis

To truly appreciate GPT-3, a layperson should skim the blogs and Twitter feeds of its beta testers (GPT-3 isn't available to the public yet) and see for themselves. Here are some examples we found.

One tester asked GPT-3 to create a conversation between Gandhi and Genghis Khan. Within seconds, this is what it produced:

“War is not the answer,” says Ghandi. “The death of one person can not be justified by the fall of another. The cycle must stop.”

“Bah,” says Genghis. “Are you woman? The weak prefer diplomacy to warfare. It is obvious you have never been in a real battle. When the sword taketh away, it giveth even more. You cannot afford to be weak. Only the strong survive.”

And their conversation goes on like this for several paragraphs.

Another tester asked GPT-3 to rewrite text from Harry Potter in the style of other writers like Ernest Hemingway, Jane Austen and Arthur Conan Doyle. They’re creepily good.

By Ernest Hemingway:

“It was a cold day on Privet Drive. A child cried. Harry felt nothing. He was dryer than dust. He had been silent too long. He had not felt love. He had scarcely felt hate. Yet the Dementor’s Kiss killed nothing. Death didn’t leave him less dead than he had been a second before. It wasn’t about living or dying really. It wasn’t about death. It was about the Dark Lord, his creator, his engenderer. He was not resurected. He was created again.”

By Jane Austen:

“It is a truth universally acknowledged, that a broken Harry is in want of a book. This he knows to his cost; pressing that wretched nose against the window of a bookstore in Diagon Alley the last thing before he goes into hiding for several years, where upon, he goes straight to Azkaban.”

Email, websites, balance sheets and more

So how does GPT-3 work?

For starters, it has analysed almost a trillion words on the Internet. From all this text it has learned statistical patterns that let it make a best guess at which words should follow a given question or request. It has no understanding of what these words mean. It's not intelligent; it's just really good at matching patterns.

We mean really good.
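To make "best guess at which words should follow" concrete, here is a deliberately tiny sketch. It is not how GPT-3 works internally: GPT-3 is a neural network with 175 billion parameters, while this toy (with hypothetical helper names `train_bigrams` and `generate`) simply counts which word follows which in a one-line corpus, then repeatedly picks the most common follower, feeding each guess back in as the next starting point.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Toy 'training': count which words follow each word in the text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, length):
    """Greedily extend `start` by always choosing the most frequent next word.

    Each new word becomes the context for the next guess, so the model only
    ever builds on its own previous output (ties go to the word seen first).
    """
    output = [start]
    for _ in range(length):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # no pattern was ever seen after this word, so stop
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)

corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)
print(generate(model, "the", 5))  # prints "the cat sat on the cat"
```

The output is fluent-looking yet meaningless: the model matched patterns perfectly but has no idea what a cat is, and because it only looks at its own last word, it loops back on itself. GPT-3 looks at far more context and patterns, which is why its mistakes are much subtler.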

One company is already figuring out how GPT-3 can be used to instantly reply to long and complicated emails while mimicking your writing style, so no one is the wiser.

Another tester decided to see what GPT-3 could do with dense and often incomprehensible legal documents. It could do a lot, it turns out. With just a couple of examples to learn from, GPT-3 started turning legalese into very clear and concise language.

And there's so much more. Web designers are noticing that GPT-3 can create web pages from just a few simple text instructions. For example, when someone asked GPT-3 to create a page with a search box and a subscribe button that looks like a watermelon, that's exactly what they got. In seconds.

Another tester taught GPT-3 to turn simple text instructions into very sharp-looking balance sheets. Instantly. Another configured GPT-3 to scan ingredient labels on food packaging and tell him if the contents were healthy.

In bad hands

There are so many great examples that promise to make our lives easier. But as with any amazing new tool, bad people are going to do bad things.

SEO, for example, might change overnight. Currently, Google search favours long-form content when it calculates its rankings. But if GPT-3 can churn out thousands of long-form stories within seconds, what does that mean for people trying to find the best results for their search?

Disinformation will get worse as GPT-3 can produce very convincing arguments using fake inputs. And how will schools prevent students from using GPT-3 to write their essays? If it can replicate the style of Austen or Hemingway, it’s not going to have a problem with the style of student John Smith in Ms McCurdle’s grade 11 history class.

The ramifications here are quite frightening.

It’s not perfect

But before writers, teachers, content creators and web designers are overcome with despair, relax: beta testers are also finding that GPT-3 has significant limitations.

Sometimes it sounds quite stupid. Consider this example.

Q. Which is heavier, a toaster or a pencil?

A. A pencil is heavier than a toaster.

A human immediately sees this as nonsense. GPT-3 most likely got it wrong because comparisons between toasters and pencils rarely, if ever, appear in the text it learned from. Worse, it didn't skip the question or say it didn't know.

GPT-3 also has problems sustaining long and cohesive narratives. Because it generates each new chunk of text from the text that came before, then feeds that output back in to generate the next chunk, it often drifts away from the initial point.

Some testers who have spent a lot of time with GPT-3 are also saying they are learning to spot text generated by the AI. It’s not something they can put their finger on. The human brain is just really good at spotting content not written by a human. For now anyway.

The future

GPT-3 was created by OpenAI, an artificial intelligence lab established by founders including Elon Musk and Sam Altman with the mission of ensuring that AI benefits humanity. Some form of GPT-3 is expected to be free or low-cost when it's finally made available to the public.

When it does launch, many jobs held by humans today, particularly in content creation, will be threatened by GPT-3’s ability to generate an incredible amount of text about almost any subject, as well as its prowess with website design and spreadsheets.

But some skills will become more important. Let’s look at a few.

- Editing: As we’ve seen, GPT-3 often generates text that is incoherent or just wrong. The ability to sift and shape rough copy into highly polished final drafts will be a valued skill that differentiates the good users of GPT-3 from the bad.

- Asking the right questions: The quality of the information you get on the Internet today is usually dependent on how you search. For example, if a student is asked to write an essay about lions, they’re not going to get something unique if they ask ‘What is a lion?’ But if the student asks ‘Are there any lions outside of Africa?’ they will get material about a small population of Asiatic lions still hanging on in the Gir forest of India. The ability to ask a great question will be much more important than knowing the answer because GPT-3 will usually have that answer.

- Creating new information: GPT-3 cannot create new information. It only recombines patterns from the text it learned from. So original content like interviews and surveys becomes more valuable, as do new ways of comparing information. For example, we saw GPT-3 stumble when asked to compare a toaster with a pencil, probably because that comparison rarely, if ever, appears online.

- Authenticity: This is already gaining importance in a world flooded with information. People want to connect with other people and brands in ways that feel authentic and intimate. While GPT-3 will get much better at faking this authenticity, consumers and people in general will likely just become hungrier for the real thing, and that's something only a real person can provide.

As GPT-3 continues to be tested, there will be new discoveries — and new concerns. Some will be amazing and some will be frightening. But it’s really just the beginning.

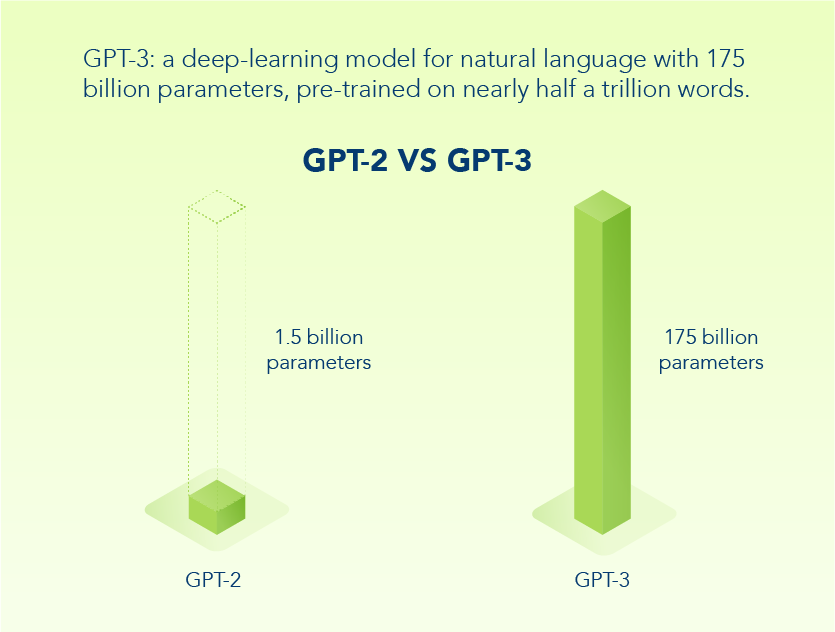

GPT-3 uses 175 billion parameters to generate its answers, and people are already talking about new versions with over 1 trillion parameters.

By that time we won’t be laughing at its mistakes with toasters and pencils. It might be laughing at us.